Loss Function - Cross Entropy

Cross entropy is mostly used for classification tasks in neural networks, for example binary classification or multi-class classification.

Let's talk about entropy first.

A good video that explains entropy well [1]:

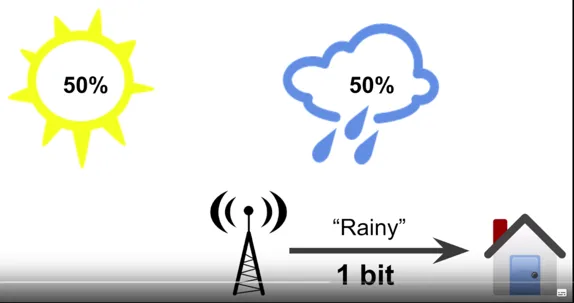

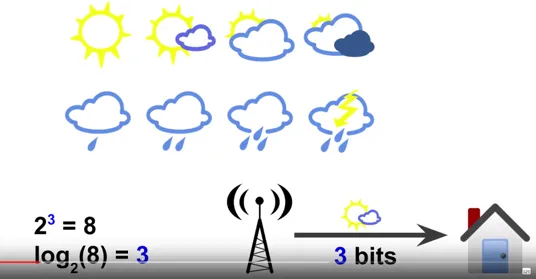

An example from a weather station

A weather station reports a 50/50 chance of rain or sunshine, so we can use 1 bit to represent the weather information. If there are more weather states, we need more bits: for example, with 8 equally likely weather states we can use 3 bits to represent them.

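Here's the formula. For $N$ equally likely states, each with probability $p = 1/N$, the number of bits is

$$\log_2 N = -\log_2 p$$

- 50/50 sunny or raining: $-\log_2 0.5 = \log_2 2 = 1$, so we use 1 bit.
- 8 weather states: $-\log_2 \tfrac{1}{8} = \log_2 8 = 3$, so we use 3 bits.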
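The bit counts above can be checked with a few lines of Python. This is a minimal sketch that assumes every state is equally likely. The helper names `bits_needed` and `bits_from_probability` are hypothetical and used only for illustration.

```python
import math

def bits_needed(num_states: int) -> float:
    """Bits needed to encode one of `num_states` equally likely states: log2(N)."""
    return math.log2(num_states)

def bits_from_probability(p: float) -> float:
    """The same quantity written through the probability p = 1/N: -log2(p)."""
    return -math.log2(p)

print(bits_needed(2))               # 50/50 sunny or raining -> 1.0
print(bits_needed(8))               # 8 weather states -> 3.0
print(bits_from_probability(0.5))   # -> 1.0
print(bits_from_probability(1 / 8)) # -> 3.0
```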

What if the probabilities are not equal?

| event | probability | entropy ($-p\log_2 p$) |
|---|---|---|
| sunny | 0.75 | $-0.75\log_2 0.75 \approx 0.311$ |
| raining | 0.25 | $-0.25\log_2 0.25 = 0.5$ |
| total | 1 | $\approx 0.811$ bits |
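The entropy of this table can be computed row by row. Each event contributes $-p\log_2 p$ bits, and the total comes to about 0.811 bits. That is less than 1 bit because a 75/25 forecast is more predictable than a 50/50 one. This is a small sketch using only the standard library. The `weather` dictionary simply mirrors the table.

```python
import math

# Probabilities taken from the table
weather = {"sunny": 0.75, "raining": 0.25}

total = 0.0
for event, p in weather.items():
    contribution = -p * math.log2(p)  # this event's share of the entropy, in bits
    total += contribution
    print(f"{event:8s} p={p:.2f}  {contribution:.3f} bits")

print(f"total: {total:.3f} bits")  # about 0.811 bits
```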

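As a result, we can calculate the entropy with the following formula:

$$H(p) = -\sum_{x} p(x)\log_2 p(x)$$

When the probabilities inside the logarithm come from a predicted distribution $q$ instead of the true distribution $p$, the same expression gives the cross entropy:

$$H(p, q) = -\sum_{x} p(x)\log_2 q(x)$$

where

- $p$: the true probability distribution
- $q$: the predicted probability distribution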
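Here is a direct translation of the two formulas into Python, as a sketch. The functions `entropy` and `cross_entropy` are hypothetical helpers, not a library API. The predicted distribution `q` is an invented example, and terms with $p(x) = 0$ are skipped because they contribute nothing. Because it is a sketch, a zero in `q` where `p` is positive makes `math.log` raise a `ValueError`. Real loss implementations usually clip `q` away from 0 instead.

```python
import math
from typing import Sequence

def entropy(p: Sequence[float], base: float = 2.0) -> float:
    """H(p) = -sum p(x) log p(x); terms with p(x) = 0 are skipped."""
    return -sum(px * math.log(px, base) for px in p if px > 0)

def cross_entropy(p: Sequence[float], q: Sequence[float], base: float = 2.0) -> float:
    """H(p, q) = -sum p(x) log q(x); p is the true and q the predicted distribution."""
    return -sum(px * math.log(qx, base) for px, qx in zip(p, q) if px > 0)

p = [0.75, 0.25]  # true distribution (sunny, raining)
q = [0.5, 0.5]    # hypothetical predicted distribution

print(entropy(p))           # about 0.811 bits
print(cross_entropy(p, p))  # same as entropy(p): predicting p exactly
print(cross_entropy(p, q))  # 1 bit: the 50/50 guess costs more than H(p)
```

Passing the true distribution as both arguments recovers the entropy. This shows that entropy is the special case of cross entropy where the prediction is perfect.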

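The log base doesn't really matter, because changing the base only scales the result by a constant factor. When calculating digital information or communication, base 2 is preferred in most cases (the unit is bits). For other problems we can use another base, such as base e (the unit is nats).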
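To see that the base only rescales the result, compute the same entropy in bits and in nats and compare them. The ratio is always $\ln 2 \approx 0.693$, whatever distribution is used. This sketch reuses the weather probabilities from the table.

```python
import math

p = [0.75, 0.25]  # weather distribution from the table

h_bits = -sum(px * math.log2(px) for px in p)  # base 2 -> bits
h_nats = -sum(px * math.log(px) for px in p)   # base e -> nats

print(h_bits, h_nats)
print(h_nats / h_bits)  # equals ln(2): only a constant factor separates the two
```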

Now it's cross entropy's turn.

Cross entropy measures the difference between two probability distributions.
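One way to see cross entropy as a measure of difference is to hold the true distribution fixed and vary the prediction. $H(p, q)$ is never smaller than $H(p)$, and it equals $H(p)$ only when $q = p$. It then grows as $q$ drifts away from $p$. In this sketch the three candidate predictions are invented for illustration, and the natural log is used.

```python
import math

def cross_entropy(p, q):
    """H(p, q) = -sum p(x) * ln q(x), using the natural log."""
    return -sum(px * math.log(qx) for px, qx in zip(p, q) if px > 0)

def entropy(p):
    """H(p) is the cross entropy of p with itself."""
    return cross_entropy(p, p)

p = [0.75, 0.25]  # true distribution
candidates = {    # hypothetical predictions, from close to far
    "close guess": [0.7, 0.3],
    "50/50 guess": [0.5, 0.5],
    "far guess": [0.1, 0.9],
}

print(f"entropy H(p) = {entropy(p):.4f}")
for name, q in candidates.items():
    print(f"{name:12s} H(p, q) = {cross_entropy(p, q):.4f}")
```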

Now take a machine learning problem. We need a loss function to evaluate how close the predicted values are to the actual values, so that we can feed the error back into the model and improve its accuracy [2].
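Below is a minimal sketch of cross entropy used as a classification loss. It assumes the model outputs raw scores that a softmax turns into probabilities, and that labels are one-hot. With a one-hot label, the sum in $H(p, q)$ keeps only the true-class term, so the loss reduces to $-\ln q_{\text{true}}$. The helpers `softmax`, `categorical_cross_entropy`, and `binary_cross_entropy` are hand-written for illustration, not taken from any framework. The logits are made-up numbers, and the `eps` clipping is an assumption added to avoid `log(0)`.

```python
import math

def softmax(logits):
    """Turn raw model scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def categorical_cross_entropy(true_index, probs, eps=1e-12):
    """Multi-class loss with a one-hot label: only the true-class term survives,
    so H(p, q) reduces to -ln q[true_index]."""
    return -math.log(max(probs[true_index], eps))

def binary_cross_entropy(y, q, eps=1e-12):
    """Binary loss for a label y in {0, 1} and predicted probability q = P(y = 1)."""
    q = min(max(q, eps), 1 - eps)  # clip so neither log sees 0
    return -(y * math.log(q) + (1 - y) * math.log(1 - q))

# Hypothetical 3-class example
logits = [2.0, 0.5, -1.0]
probs = softmax(logits)
print(probs)
print(categorical_cross_entropy(0, probs))  # small: class 0 already has the highest probability
print(categorical_cross_entropy(2, probs))  # large: class 2 was given low probability

# Binary examples
print(binary_cross_entropy(1, 0.9))  # confident and correct -> about 0.105
print(binary_cross_entropy(1, 0.1))  # confident and wrong -> about 2.303
```

The loss is small when the model puts high probability on the correct class and grows quickly as that probability approaches 0. That growth is what pushes the model's predictions toward the true labels during training.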